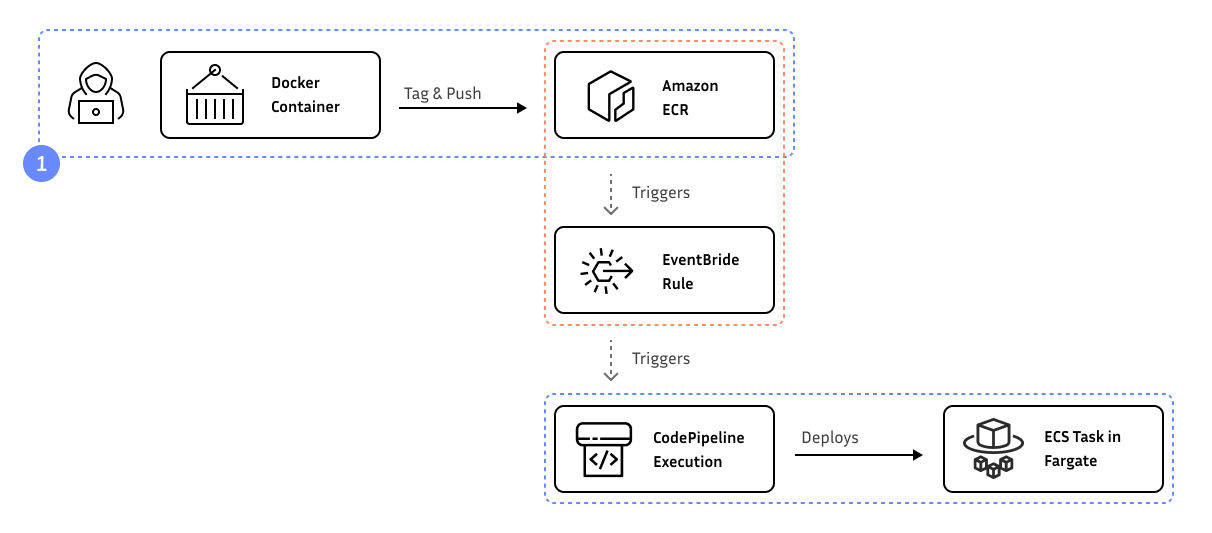

AWS Fargate is great for running containers without having to manage the underlying infrastructure. Using AWS CodePipeline, you can update your running Fargate service whenever you push a new container image to Amazon Elastic Container Registry (Amazon ECR).

The Docker Container

Building on the sources for running a serverless container with Fargate, we will add a pipeline to the stack that deploys the container to Fargate. But first, let’s update the Docker container to include a version identifier.

    # Update index.html to include a version identifier
    $ > echo "Welcome. Running {version}" > src/index.html

Next, update the Dockerfile to expect the version identifier:

    FROM nginx:latest

    # Add build argument declaration with empty default
    ARG VERSION=""

    # Validate that VERSION is provided
    RUN [ ! -z "$VERSION" ] || (echo "VERSION build argument is required" && false)

    COPY ./src/index.html /usr/share/nginx/html/index.html

    # Replace {version} placeholder with VERSION build arg
    RUN sed -i "s/{version}/${VERSION}/g" /usr/share/nginx/html/index.html
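
The two Dockerfile steps (reject an empty version, then substitute the placeholder) can also be sketched outside of Docker. The following TypeScript is only an illustration: `renderVersion` is a hypothetical helper and not part of the project. It uses plain string replacement, so it sidesteps characters such as `/` or `&` that would need escaping in the sed expression.

```typescript
// Hypothetical helper mirroring the Dockerfile: validate the version first,
// then replace every "{version}" placeholder, like sed's "s/{version}/.../g".
function renderVersion(template: string, version: string): string {
  if (version.length === 0) {
    // Same guard as the RUN check in the Dockerfile
    throw new Error("VERSION build argument is required");
  }
  // split/join replaces all occurrences without any regex escaping
  return template.split("{version}").join(version);
}

const renderedPage: string = renderVersion("Welcome. Running {version}", "1.0.0");
console.log(renderedPage); // Welcome. Running 1.0.0
```

A check like this could run in a unit test, so a missing version is caught before an image is built.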

When building the container, you can now pass the version identifier:

    # Build the container locally with a version identifier
    $ > docker build --build-arg VERSION=1.0.0 . -t app

    # Run the container locally
    $ > docker run -p 8080:80 app

    # Verify that the version identifier is included in the output
    $ > curl localhost:8080

    Welcome. Running 1.0.0

Nice! Next, we need to push the container image to Amazon ECR, and we want this version deployed to Fargate automatically.

AWS CodePipeline

Let’s start by creating a new pipeline in the existing stack and configuring the Amazon ECR repository as its source.

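    // Modules used by the snippets in this article (AWS CDK v2).
    // `stack`, `repository`, and `service` come from the existing stack.
    import {
      aws_codebuild,
      aws_codepipeline,
      aws_codepipeline_actions,
      aws_events,
      aws_events_targets,
      aws_iam,
    } from "aws-cdk-lib";

    const pipeline = new aws_codepipeline.Pipeline(stack, "DeployPipeline", {
      pipelineName: "serverless-container",
    });

    const sourceOutput = new aws_codepipeline.Artifact();

    const sourceAction = new aws_codepipeline_actions.EcrSourceAction({
      actionName: "ECR",
      repository: repository,
      imageTag: "latest",
      output: sourceOutput,
    });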

The buildAction is slightly more complex, as it needs to generate the image definitions file that the ECS deploy action reads; but it’s mostly boilerplate. It uses a CodeBuild project to write imageDefinitions.json, and the resulting artifact is stored in an S3 bucket:

    const buildOutput = new aws_codepipeline.Artifact();

    const buildProject = new aws_codebuild.PipelineProject(stack, "BuildProject", {
      buildSpec: aws_codebuild.BuildSpec.fromObject({
        version: "0.2",
        phases: {
          build: {
            commands: [
              'echo "[{\\"name\\":\\"web\\",\\"imageUri\\":\\"${REPOSITORY_URI}:${IMAGE_TAG}\\"}]" > imageDefinitions.json',
            ],
          },
        },
        artifacts: {
          files: ["imageDefinitions.json"],
        },
      }),
      environment: {
        buildImage: aws_codebuild.LinuxBuildImage.STANDARD_5_0,
      },
      environmentVariables: {
        REPOSITORY_URI: {
          value: repository.repositoryUri,
        },
        IMAGE_TAG: {
          value: "latest",
        },
      },
    });

    const buildAction = new aws_codepipeline_actions.CodeBuildAction({
      actionName: "BuildImageDefinitions",
      project: buildProject,
      input: sourceOutput,
      outputs: [buildOutput],
    });
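
The escaped echo command is hard to read, so here is a sketch of the JSON it writes. `buildImageDefinitions` is a hypothetical helper used only for illustration; the repository URI is the one from the docker commands in this article, and `web` is assumed to be the container name in the task definition.

```typescript
// One entry of imageDefinitions.json, as read by the ECS deploy action.
interface ImageDefinition {
  name: string; // container name in the task definition ("web" in this article)
  imageUri: string; // full image reference, including the tag
}

// Hypothetical helper: produces the same JSON the echo command writes.
function buildImageDefinitions(
  containerName: string,
  repositoryUri: string,
  imageTag: string
): string {
  const definitions: ImageDefinition[] = [
    { name: containerName, imageUri: `${repositoryUri}:${imageTag}` },
  ];
  return JSON.stringify(definitions);
}

const exampleDefinitions: string = buildImageDefinitions(
  "web",
  "120032447123.dkr.ecr.us-east-1.amazonaws.com/serverless-container",
  "latest"
);
console.log(exampleDefinitions);
// [{"name":"web","imageUri":"120032447123.dkr.ecr.us-east-1.amazonaws.com/serverless-container:latest"}]
```

The `name` value has to match the container name in the task definition, otherwise the deploy action has no container to update.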

And finally, we create a deployAction to deploy the container to Fargate:

    const deployAction = new aws_codepipeline_actions.EcsDeployAction({
      actionName: "Deploy",
      service: service.service,
      imageFile: buildOutput.atPath("imageDefinitions.json"),
    });

Adding all the actions to the pipeline is straightforward:

    pipeline.addStage({
      stageName: "Source",
      actions: [sourceAction],
    });

    pipeline.addStage({
      stageName: "Build",
      actions: [buildAction],
    });

    pipeline.addStage({
      stageName: "Deploy",
      actions: [deployAction],
    });

Of course, CodePipeline needs to have access to the repository to pull the container image:

    repository.grantPull(pipeline.role);

Run cdk deploy and you should have a working pipeline. You can start it manually in the AWS Management Console or, of course, trigger it with the AWS CLI:

    $ > aws codepipeline start-pipeline-execution --name serverless-container

Of course, without pushing a new container version, this will result in no changes to the running service. But the pipeline should still run fine and finish successfully.

Trigger CodePipeline on ECR Push

Amazon ECR sends events to an EventBridge event bus, which can be used to trigger a CodePipeline execution. To trigger the pipeline, we first need to create a role that has the necessary permissions:

    const roleECRTrigger = new aws_iam.Role(stack, "RoleECRTrigger", {
      assumedBy: new aws_iam.ServicePrincipal("events.amazonaws.com"),
    });

    roleECRTrigger.addToPolicy(
      new aws_iam.PolicyStatement({
        actions: ["codepipeline:StartPipelineExecution"],
        resources: [pipeline.pipelineArn],
      })
    );
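
To make the role less abstract, this sketch spells out roughly what the two IAM documents behind it look like. It is an approximation and not the exact CloudFormation output of CDK. The `pipelineArn` helper is hypothetical, and the region and account ID are assumptions taken from the docker commands later in this article.

```typescript
// Hypothetical helper: the ARN format CodePipeline uses for a pipeline.
function pipelineArn(region: string, accountId: string, pipelineName: string): string {
  return `arn:aws:codepipeline:${region}:${accountId}:${pipelineName}`;
}

// Trust policy: lets EventBridge (events.amazonaws.com) assume the role.
const trustPolicy = {
  Version: "2012-10-17",
  Statement: [
    {
      Effect: "Allow",
      Principal: { Service: "events.amazonaws.com" },
      Action: "sts:AssumeRole",
    },
  ],
};

// Permissions policy: the role may start this one pipeline and nothing else.
const permissionsPolicy = {
  Version: "2012-10-17",
  Statement: [
    {
      Effect: "Allow",
      Action: ["codepipeline:StartPipelineExecution"],
      // Assumed region and account ID, see the note above
      Resource: [pipelineArn("us-east-1", "120032447123", "serverless-container")],
    },
  ],
};

console.log(JSON.stringify(trustPolicy, null, 2));
console.log(JSON.stringify(permissionsPolicy, null, 2));
```

Scoping `Resource` to a single pipeline ARN keeps the role from starting any other pipeline in the account.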

Next, we can create the rule on EventBridge to trigger the pipeline when a new image is pushed to the repository:

    new aws_events.Rule(stack, "EventRuleECRTrigger", {
      eventPattern: {
        source: ["aws.ecr"],
        detail: {
          "repository-name": [repository.repositoryName],
          "image-tag": ["latest"],
          // ECR image events report the action in the "action-type" field
          "action-type": ["PUSH"],
        },
      },
      targets: [
        new aws_events_targets.CodePipeline(pipeline, {
          eventRole: roleECRTrigger,
        }),
      ],
    });
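
To see why only pushes of the latest tag start the pipeline, here is a simplified sketch of how such a pattern is compared with the `detail` part of an event. ECR image events report the action in a field called `action-type`. The `matchesDetail` function is a hypothetical stand-in that only handles exact-value lists; real EventBridge patterns support more operators. The sample events are hand-written and reduced to the relevant fields.

```typescript
type EventDetail = Record<string, string>;
type DetailPattern = Record<string, string[]>;

// Simplified matcher: every key in the pattern must be present in the event,
// and the event's value must be one of the alternatives listed for that key.
function matchesDetail(pattern: DetailPattern, detail: EventDetail): boolean {
  return Object.keys(pattern).every((key) => {
    const allowed = pattern[key];
    const value = detail[key];
    return allowed !== undefined && value !== undefined && allowed.indexOf(value) !== -1;
  });
}

// The "detail" part of the rule, with the repository name filled in
const rulePattern: DetailPattern = {
  "repository-name": ["serverless-container"],
  "image-tag": ["latest"],
  "action-type": ["PUSH"],
};

// Hand-written sample events, reduced to the fields used here
const latestPush: EventDetail = {
  "action-type": "PUSH",
  result: "SUCCESS",
  "repository-name": "serverless-container",
  "image-tag": "latest",
};
const versionedPush: EventDetail = {
  "action-type": "PUSH",
  result: "SUCCESS",
  "repository-name": "serverless-container",
  "image-tag": "1.0.1",
};

console.log(matchesDetail(rulePattern, latestPush)); // true
console.log(matchesDetail(rulePattern, versionedPush)); // false
```

Fields that the pattern does not mention, such as `result`, are ignored, so the rule could be narrowed further by listing them.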

As always, run cdk deploy to deploy the changes to your AWS account. Now it’s time to finally push a new version of the container to ECR and see the pipeline in action.

    # Build the container locally with a version identifier
    $ > docker build --build-arg VERSION=1.0.1 . -t app

    # Add required tags
    $ > docker tag app 120032447123.dkr.ecr.us-east-1.amazonaws.com/serverless-container:latest

    # Login to ECR and push container
    $ > aws ecr get-login-password --region us-east-1 | docker login --username AWS --password-stdin 120032447123.dkr.ecr.us-east-1.amazonaws.com
    $ > docker push 120032447123.dkr.ecr.us-east-1.amazonaws.com/serverless-container:latest
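
The long registry address in these commands follows a fixed pattern of account ID, region, repository name, and tag. As a small sketch, the two hypothetical helpers below (`ecrRegistry` and `ecrImageUri`, not part of any AWS library) compose the values used above, which can help when scripting the push for another account or region.

```typescript
// Hypothetical helper: the registry host used by `docker login`.
function ecrRegistry(accountId: string, region: string): string {
  return `${accountId}.dkr.ecr.${region}.amazonaws.com`;
}

// Hypothetical helper: the full image reference used by `docker tag` and `docker push`.
function ecrImageUri(
  accountId: string,
  region: string,
  repositoryName: string,
  tag: string
): string {
  return `${ecrRegistry(accountId, region)}/${repositoryName}:${tag}`;
}

const registryHost: string = ecrRegistry("120032447123", "us-east-1");
const pushTarget: string = ecrImageUri("120032447123", "us-east-1", "serverless-container", "latest");

console.log(registryHost); // 120032447123.dkr.ecr.us-east-1.amazonaws.com
console.log(pushTarget); // 120032447123.dkr.ecr.us-east-1.amazonaws.com/serverless-container:latest
```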

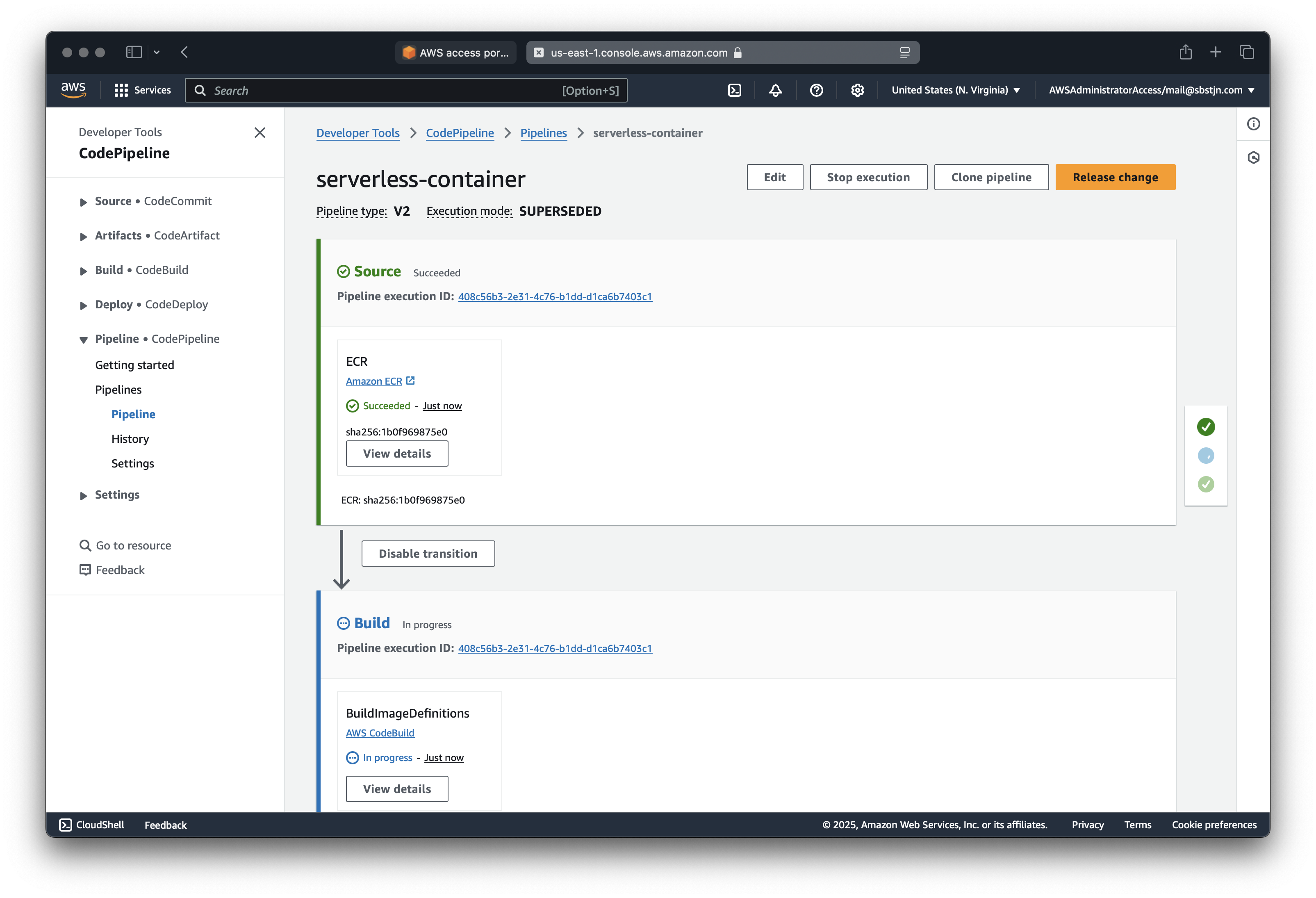

Switch back to the AWS Management Console and you should see the pipeline being triggered.

After the pipeline has finished, the new container is running:

    $ > curl https://serverless-container.aws.sbstjn.com

    Welcome. Running 1.0.1

And there you have it: 🎉 a fully automated pipeline that deploys a new version of your container to Fargate whenever a new image is pushed to Amazon ECR.

Further Reading

This is based on the guide to running a serverless container with Fargate on ECS. Did you read about DNS Failover with Route 53 and static content on CloudFront and S3?