Building on running a serverless container with Fargate, we want to add automated DNS failover handling with Route 53. Whenever the health check for the Fargate service on Amazon ECS fails, the domain resolves to a static website on Amazon S3, served with CloudFront.

Starting from the guide AWS CDK: Serverless Container with Fargate, we add the failover handling to Route 53.

Existing DNS Configuration

The baseline for Route 53 was already created in the previous guide: a public hosted zone and a record set using an alias target for the load balancer of the Fargate service.

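```typescript
import { aws_route53, aws_route53_targets } from "aws-cdk-lib";

// `stack` and `service` are created in the previous guide
const zone = new aws_route53.PublicHostedZone(stack, "HostedZone", {
  zoneName: "serverless-container.aws.sbstjn.com",
});

// Simple A record aliasing the load balancer of the Fargate service
new aws_route53.RecordSet(stack, "RecordSet", {
  zone,
  recordName: "serverless-container.aws.sbstjn.com",
  recordType: aws_route53.RecordType.A,
  target: aws_route53.RecordTarget.fromAlias(
    new aws_route53_targets.LoadBalancerTarget(service.loadBalancer)
  ),
});
```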

This record always resolves to the load balancer, even when the service on ECS is down. Instead, the domain should resolve to a static website on Amazon S3 and CloudFront whenever the health check for the service fails.

Static Website as Fallback

First, we need an Amazon S3 bucket and a CloudFront distribution. The bucket stays private and blocks all public access; thanks to an Origin Access Control (OAC), only the CloudFront distribution can read its content. Conveniently, S3BucketOrigin.withOriginAccessControl in the AWS Cloud Development Kit (CDK) creates the Origin Access Control and adds the required bucket policy automatically.

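```typescript
import { CfnOutput, aws_s3 } from "aws-cdk-lib";

// Private bucket: no public access, content is only served through CloudFront
const bucket = new aws_s3.Bucket(stack, "FallbackStorage", {
  blockPublicAccess: aws_s3.BlockPublicAccess.BLOCK_ALL,
  accessControl: aws_s3.BucketAccessControl.PRIVATE,
});

new CfnOutput(stack, "FallbackStorageBucketName", {
  value: bucket.bucketName,
});
```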

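```typescript
import { aws_cloudfront, aws_cloudfront_origins } from "aws-cdk-lib";

// `certificate` is an ACM certificate for the domain, created in us-east-1
const distribution = new aws_cloudfront.Distribution(stack, "Distribution", {
  defaultRootObject: "index.html",
  defaultBehavior: {
    // Creates an Origin Access Control and grants it read access via the bucket policy
    origin: aws_cloudfront_origins.S3BucketOrigin.withOriginAccessControl(bucket),
    viewerProtocolPolicy: aws_cloudfront.ViewerProtocolPolicy.REDIRECT_TO_HTTPS,
  },
  domainNames: ["serverless-container.aws.sbstjn.com"],
  certificate,
});

new CfnOutput(stack, "DistributionDomainName", {
  value: distribution.domainName,
});
```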
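The distribution expects an existing certificate variable. If the application stack lives in another region, one way to provide it is a second stack pinned to us-east-1, connected through CDK's experimental crossRegionReferences flag. This is a minimal sketch, assuming aws-cdk-lib v2: the account ID 123456789012, the region eu-central-1, and the stack names are placeholders, and because no hosted zone is passed, ACM expects the DNS validation record to be created by hand.

```typescript
import { App, Stack, aws_certificatemanager } from "aws-cdk-lib";

const app = new App();

// Placeholder account ID; cross-region references need concrete environments.
const account = "123456789012";

// CloudFront only accepts ACM certificates from us-east-1.
const certificateStack = new Stack(app, "serverless-container-certificate", {
  env: { account, region: "us-east-1" },
  crossRegionReferences: true,
});

const certificate = new aws_certificatemanager.Certificate(certificateStack, "Certificate", {
  domainName: "serverless-container.aws.sbstjn.com",
  // No hosted zone passed: the DNS validation record must be created manually.
  validation: aws_certificatemanager.CertificateValidation.fromDns(),
});

// Application stack in another region (placeholder); pass `certificate`
// to the Distribution created in this stack.
const stack = new Stack(app, "serverless-container", {
  env: { account, region: "eu-central-1" },
  crossRegionReferences: true,
});
```

Since crossRegionReferences adds helper custom resources to both stacks, creating the certificate once and importing it with aws_certificatemanager.Certificate.fromCertificateArn is a simpler alternative.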

When passing the certificate to the CloudFront distribution, make sure it’s created in the us-east-1 region: CloudFront only accepts ACM certificates from US East (N. Virginia). Run cdk deploy and use the outputs of the Amazon S3 bucket and the CloudFront distribution next:

```shell
$ > cdk deploy
[…]
✅ serverless-container

✨ Deployment time: 31.96s

Outputs:
serverless-container.DistributionDomainName = ac39ca34gda.cloudfront.net
serverless-container.FallbackStorageBucketName = your-bucket-name

# Create source files
$ > mkdir -p fallback
$ > echo "Fallback." > fallback/index.html

# Upload to S3
$ > aws s3 cp fallback/ s3://your-bucket-name --recursive
upload: fallback/index.html to s3://your-bucket-name/index.html
```

Now, when accessing the domain name of the CloudFront distribution, you should see the fallback HTML file:

```shell
$ > curl https://ac39ca34gda.cloudfront.net
Fallback.
```
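Instead of uploading the fallback page with the AWS CLI, CDK can ship it with every deployment. A minimal sketch using BucketDeployment from aws-cdk-lib v2, assuming a standalone stack for illustration: the inline Source.data content and the construct IDs are assumptions, and a real setup would rather point Source.asset at the fallback/ directory.

```typescript
import { App, Stack, aws_s3, aws_s3_deployment } from "aws-cdk-lib";

const app = new App();
const stack = new Stack(app, "serverless-container-fallback");

const bucket = new aws_s3.Bucket(stack, "FallbackStorage", {
  blockPublicAccess: aws_s3.BlockPublicAccess.BLOCK_ALL,
});

// Copies the fallback page into the bucket on every `cdk deploy`.
new aws_s3_deployment.BucketDeployment(stack, "FallbackDeployment", {
  destinationBucket: bucket,
  // Inline content for the sketch; Source.asset("./fallback") would upload the folder.
  sources: [aws_s3_deployment.Source.data("index.html", "Fallback.")],
  // Adding `distribution` and `distributionPaths: ["/*"]` would also
  // invalidate the CloudFront cache after each upload.
});
```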

Route 53 Failover

Now, we add the failover handling to Route 53: as long as the health check for the service on ECS passes, the domain resolves to the load balancer; once it fails, Route 53 answers with the CloudFront distribution serving the static website.

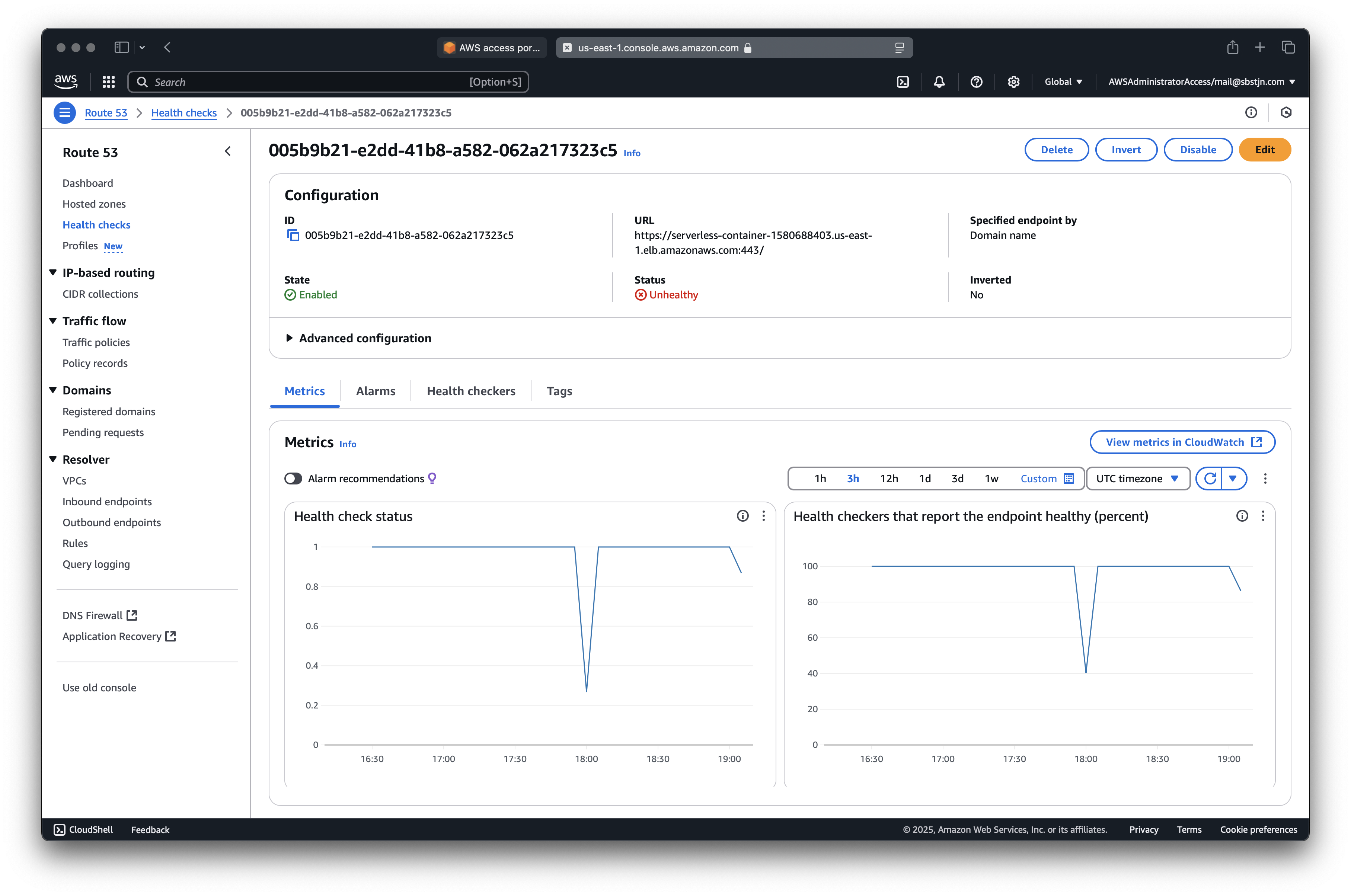

Route 53 Health Check

First, we create a Route 53 health check for the service on ECS. Health checkers in three regions request / from the load balancer via HTTPS every 10 seconds and consider it unhealthy after three consecutive failures:

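```typescript
const healthcheck = new aws_route53.CfnHealthCheck(stack, "HealthCheck", {
  healthCheckConfig: {
    type: "HTTPS",
    failureThreshold: 3, // consecutive failures before the endpoint counts as unhealthy
    port: 443,
    resourcePath: "/",
    // Check the load balancer directly, not the failover domain
    fullyQualifiedDomainName: service.loadBalancer.loadBalancerDnsName,
    enableSni: false,
    regions: ["us-east-1", "us-west-1", "eu-west-1"], // at least three regions
    requestInterval: 10, // seconds; Route 53 supports 10 or 30
    measureLatency: true,
  },
});
```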
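With these settings, a rough estimate of how quickly a failure is noticed is the request interval multiplied by the failure threshold. The sketch below only does this arithmetic: it assumes every health checker sees the same failures, and the cached TTL is a hypothetical extra delay because resolvers may keep an old answer until it expires.

```typescript
// Route 53 supports request intervals of 10 ("fast") or 30 seconds.
type RequestInterval = 10 | 30;

interface HealthCheckTiming {
  requestInterval: RequestInterval;
  failureThreshold: number; // consecutive failed checks, 1 to 10
}

// Seconds of consecutive failed checks before the endpoint counts as unhealthy.
function secondsUntilUnhealthy({ requestInterval, failureThreshold }: HealthCheckTiming): number {
  if (!Number.isInteger(failureThreshold) || failureThreshold < 1 || failureThreshold > 10) {
    throw new RangeError("failureThreshold must be an integer between 1 and 10");
  }
  return requestInterval * failureThreshold;
}

// Rough worst case for clients: detection time plus a cached DNS answer (hypothetical TTL).
function secondsUntilClientsSwitch(timing: HealthCheckTiming, cachedTtlSeconds: number): number {
  return secondsUntilUnhealthy(timing) + cachedTtlSeconds;
}

const timing: HealthCheckTiming = { requestInterval: 10, failureThreshold: 3 };
console.log(secondsUntilUnhealthy(timing)); // 30
console.log(secondsUntilClientsSwitch(timing, 60)); // 90, assuming a 60-second TTL
```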

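Next, remove the existing aws_route53.RecordSet and deploy again. This creates the health check and deletes the simple A record, so the domain is temporarily not resolvable; Route 53 does not accept failover records while a simple record with the same name and type still exists. Finally, we create two new Route 53 records: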

- Primary points to the load balancer of the Fargate service, and
- Secondary points to the CloudFront distribution.
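Conceptually, Route 53 picks the answer for a query from such a record pair like the following sketch. It is a simplified model, not Route 53's implementation: it covers the healthy-primary and failed-primary cases used in this guide and ignores the case where both records are unhealthy.

```typescript
type FailoverRole = "PRIMARY" | "SECONDARY";

interface FailoverRecord {
  role: FailoverRole;
  target: string;
  // Result of the attached health check; undefined means no health check is attached.
  healthy?: boolean;
}

// Records without a health check are always treated as healthy.
function isConsideredHealthy(record: FailoverRecord): boolean {
  return record.healthy ?? true;
}

// Picks the target a failover record pair answers with.
function answerFor(records: FailoverRecord[]): string {
  const primary = records.find((r) => r.role === "PRIMARY");
  const secondary = records.find((r) => r.role === "SECONDARY");
  if (!primary || !secondary) {
    throw new Error("expected one PRIMARY and one SECONDARY record");
  }
  if (isConsideredHealthy(primary)) {
    return primary.target;
  }
  // Simplification: the secondary is returned without checking its health.
  // In this setup it has no health check, so it always counts as healthy anyway.
  return secondary.target;
}

const records: FailoverRecord[] = [
  { role: "PRIMARY", target: "load balancer", healthy: true },
  { role: "SECONDARY", target: "CloudFront distribution" },
];

console.log(answerFor(records)); // load balancer
records[0].healthy = false; // the health check fails
console.log(answerFor(records)); // CloudFront distribution
```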

By referencing the health check ID, Route 53 only answers with the primary record while the Fargate service is healthy and switches to the secondary record as soon as the health check fails. Route 53 alias targets for load balancers and CloudFront distributions require a fixed hosted zone ID provided by AWS:

- The hosted zone ID for load balancers differs per region, e.g. Z35SXDOTRQ7X7K for us-east-1
- The hosted zone ID for CloudFront distributions is Z2FDTNDATAQYW2
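Hardcoding the load balancer's zone ID ties the stack to us-east-1. A sketch of an alternative, assuming aws-cdk-lib v2: load balancer constructs expose loadBalancerCanonicalHostedZoneId, which CloudFormation resolves for whatever region the stack is deployed to. The VPC, load balancer, and stack name here are hypothetical stand-ins for service.loadBalancer from the previous guide.

```typescript
import { App, Stack, aws_ec2, aws_elasticloadbalancingv2, aws_route53 } from "aws-cdk-lib";

const app = new App();
const stack = new Stack(app, "serverless-container-zone-ids");

// Hypothetical stand-ins for the VPC and service.loadBalancer of the previous guide.
const vpc = new aws_ec2.Vpc(stack, "Vpc", { maxAzs: 2 });
const loadBalancer = new aws_elasticloadbalancingv2.ApplicationLoadBalancer(stack, "LoadBalancer", {
  vpc,
  internetFacing: true,
});

const zone = new aws_route53.PublicHostedZone(stack, "HostedZone", {
  zoneName: "serverless-container.aws.sbstjn.com",
});

new aws_route53.CfnRecordSet(stack, "RecordSetPrimaryLoadBalancer", {
  type: "A",
  aliasTarget: {
    dnsName: loadBalancer.loadBalancerDnsName,
    // Resolved at deploy time to the canonical zone ID of the load balancer's region.
    hostedZoneId: loadBalancer.loadBalancerCanonicalHostedZoneId,
  },
  failover: "PRIMARY",
  hostedZoneId: zone.hostedZoneId,
  name: zone.zoneName,
  setIdentifier: "primary",
  // healthCheckId is omitted in this sketch; attach it as in the primary record of this guide.
});
```

For the secondary record, aws_route53_targets.CloudFrontTarget.getHostedZoneId(stack) can replace the literal Z2FDTNDATAQYW2 in the same way.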

With this information, add the RecordSets to the stack (the service variable is created in the previous guide about running a serverless container with Fargate on ECS):

```typescript
// Primary: the load balancer, only returned while the health check passes
new aws_route53.CfnRecordSet(stack, "RecordSetPrimaryLoadBalancer", {
  type: "A",
  aliasTarget: {
    dnsName: service.loadBalancer.loadBalancerDnsName,
    hostedZoneId: "Z35SXDOTRQ7X7K", // load balancers in us-east-1
  },
  failover: "PRIMARY",
  hostedZoneId: zone.hostedZoneId,
  name: zone.zoneName,
  setIdentifier: "primary",
  healthCheckId: healthcheck.attrHealthCheckId,
});

// Secondary: the CloudFront distribution serving the static fallback
new aws_route53.CfnRecordSet(stack, "RecordSetSecondaryFailover", {
  type: "A",
  aliasTarget: {
    dnsName: distribution.domainName,
    hostedZoneId: "Z2FDTNDATAQYW2", // CloudFront distributions
  },
  failover: "SECONDARY",
  hostedZoneId: zone.hostedZoneId,
  name: zone.zoneName,
  setIdentifier: "secondary",
});
```

After a final deployment, the domain resolves to the running Fargate service. If the health check fails, Route 53 answers with the static website on Amazon S3, served with CloudFront, instead. Let’s test this:

```shell
$ > curl https://serverless-container.aws.sbstjn.com
Welcome.
```

Head over to the AWS Management Console and update your Fargate service on Amazon ECS. Using the Update Service button, set the desired number of tasks to 0. This stops all tasks of the Fargate service, and the health check will fail.

You can also use the AWS CLI to update the desired number of tasks:

```shell
$ > aws ecs update-service \
    --cluster serverless-container \
    --service serverless-container \
    --desired-count 0
```
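The same update can be scripted with the AWS SDK for JavaScript v3 (@aws-sdk/client-ecs), for example to automate the failover test. A minimal sketch, assuming the cluster and service are both named serverless-container as in the CLI call, and that region and credentials come from the environment; scaleCommand and scaleService are hypothetical helper names.

```typescript
import { ECSClient, UpdateServiceCommand } from "@aws-sdk/client-ecs";

// Builds the UpdateService request; names match the CLI example.
function scaleCommand(desiredCount: number): UpdateServiceCommand {
  return new UpdateServiceCommand({
    cluster: "serverless-container",
    service: "serverless-container",
    desiredCount,
  });
}

// Sends the request; region and credentials are read from the environment.
async function scaleService(desiredCount: number): Promise<void> {
  const client = new ECSClient({});
  const response = await client.send(scaleCommand(desiredCount));
  console.log(`desired count: ${response.service?.desiredCount}`);
}

// Stop all tasks to trigger the failover, and scale back up afterwards:
// await scaleService(0);
// await scaleService(1);
```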

After all running tasks are stopped and the health check reports the service as unhealthy, Route 53 answers with the static website on Amazon S3, served with CloudFront.

```shell
$ > curl https://serverless-container.aws.sbstjn.com
Fallback.
```

Nice! 🤩 Now set the desired number of tasks back to a value greater than 0 and wait for Route 53 to answer with the Fargate service again. It’s that simple. Have fun! 🎉
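Instead of re-running curl by hand, a small polling loop can wait until the domain serves the container again. A minimal sketch, assuming Node.js 18+ for the global fetch; waitForBody, the 10-second interval, and the attempt limit are hypothetical choices, and the fetcher is injectable so the loop can be exercised without network access.

```typescript
// Returns the response body for a URL; injectable so the loop can run offline.
type Fetcher = (url: string) => Promise<string>;

// Default fetcher using the global fetch of Node.js 18+.
const fetchBody: Fetcher = async (url) => (await fetch(url)).text();

// Polls `url` until the trimmed body equals `expected`; resolves with the attempt number.
async function waitForBody(
  url: string,
  expected: string,
  fetcher: Fetcher = fetchBody,
  intervalMs = 10000,
  maxAttempts = 30,
): Promise<number> {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    try {
      const body = await fetcher(url);
      if (body.trim() === expected) {
        return attempt;
      }
    } catch {
      // Connection or TLS errors can occur while DNS answers change; keep polling.
    }
    if (attempt < maxAttempts) {
      await new Promise((resolve) => setTimeout(resolve, intervalMs));
    }
  }
  throw new Error(`${url} did not serve "${expected}" after ${maxAttempts} attempts`);
}

// Usage: wait until the Fargate service answers again after scaling it back up.
// waitForBody("https://serverless-container.aws.sbstjn.com", "Welcome.").then(console.log);
```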

Further Reading

This is based on the guide about running a serverless container with Fargate on ECS. Next, deploy new containers automatically when you push them to the container registry.